Fine-tune Mask-RCNN on a Custom Dataset

In an earlier post, we saw how to use a pretrained Mask-RCNN model with PyTorch. Although that is useful in many cases, sometimes our application only needs to segment a specific class of object that may not exist among the COCO categories. In that case, we need to train a customized Mask-RCNN model to meet our needs.

In this post, we will see how to fine-tune Mask-RCNN on a custom dataset. I will cover the whole pipeline, from preparing a custom dataset to fine-tuning and evaluating the model. It will be very useful, so keep reading.

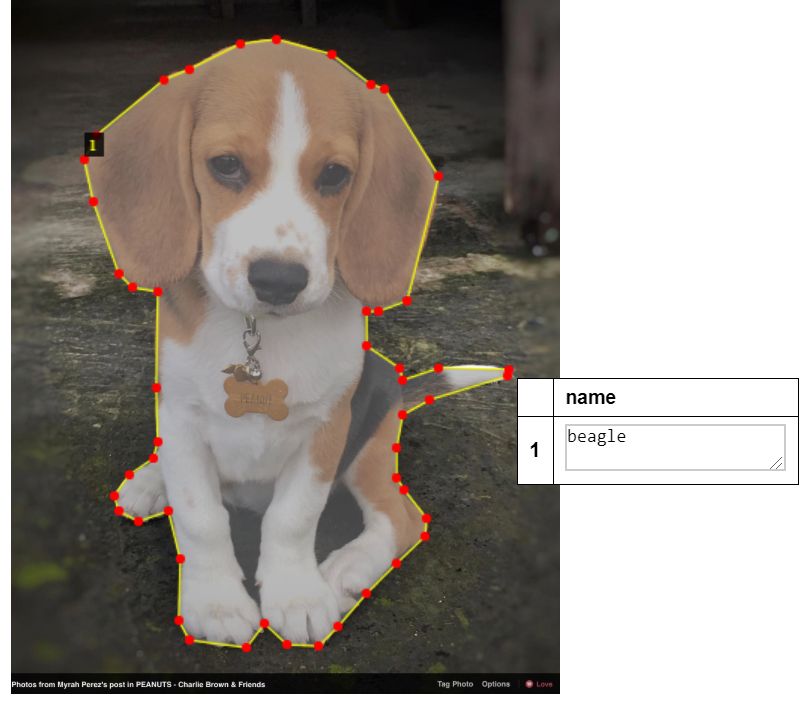

I've prepared a very small Beagle dataset, which also includes the annotation data. Feel free to download it from this link.

Step 1: Preparing the Dataset

The dataset contains 100 beagle images that I scraped from Google Images: 75 are used for training and 25 for validation.
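If you build your own dataset, a split like this can be made reproducible by shuffling the file list with a fixed seed. The following is a minimal sketch, assuming a flat list of image filenames; the function name `split_dataset`, the seed, and the 0.75 ratio are illustrative choices, not code from my repo.

```python
import random


def split_dataset(filenames, train_fraction=0.75, seed=42):
    """Shuffle filenames reproducibly and split them into (train, val) lists."""
    files = sorted(filenames)           # sort first so the result ignores input order
    random.Random(seed).shuffle(files)  # a private RNG leaves the global random state alone
    n_train = int(round(len(files) * train_fraction))
    return files[:n_train], files[n_train:]


# Hypothetical filenames standing in for the 100 scraped images
images = ["beagle_{:03d}.jpg".format(i) for i in range(100)]
train, val = split_dataset(images)
print(len(train), len(val))
```

The training and validation lists can then be copied into separate folders before annotating.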

I used the VGG Image Annotator (VIA) to annotate the training and validation images. It's a simple tool: you label all the images, and it exports the annotations to a single JSON file.
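For reference, here is roughly what a VIA 2.x polygon export looks like and how the polygons can be pulled out of it, in the same spirit as the loader in Matterport's balloon sample. This is a sketch: the inline JSON is a made-up two-image example, and `extract_polygons` is a hypothetical helper, not a function in beagle.py. Images without regions are skipped, and `regions` is accepted both as a list (VIA 2.x) and as a dict (VIA 1.x).

```python
import json

# A made-up VIA 2.x export: keys are "<filename><filesize>", one entry per image.
VIA_EXPORT = """
{
  "dog1.jpg1234": {
    "filename": "dog1.jpg", "size": 1234, "file_attributes": {},
    "regions": [
      {"shape_attributes": {"name": "polygon",
                            "all_points_x": [10, 50, 50, 10],
                            "all_points_y": [20, 20, 60, 60]},
       "region_attributes": {}}
    ]
  },
  "dog2.jpg5678": {
    "filename": "dog2.jpg", "size": 5678, "file_attributes": {},
    "regions": []
  }
}
"""


def extract_polygons(via_json_text):
    """Map each annotated filename to a list of (xs, ys) polygon point lists."""
    annotations = json.loads(via_json_text)
    result = {}
    for entry in annotations.values():
        regions = entry["regions"]
        if isinstance(regions, dict):   # VIA 1.x stores regions in a dict
            regions = list(regions.values())
        if not regions:                 # VIA keeps unannotated images; skip them
            continue
        result[entry["filename"]] = [
            (r["shape_attributes"]["all_points_x"],
             r["shape_attributes"]["all_points_y"])
            for r in regions
        ]
    return result


polygons = extract_polygons(VIA_EXPORT)
print(polygons)
```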


Step 2: Install Dependencies

First, we need to downgrade TensorFlow to 1.15.0 and Keras to 2.2.5, because Matterport's implementation of Mask-RCNN is written for TensorFlow 1.x and Google Colab ships a newer TensorFlow by default. I'm using Google Colab for this experiment.

```
!pip install tensorflow-gpu==1.15.0
!pip install keras==2.2.5
```

Next, we clone Matterport's implementation of Mask-RCNN and download the weights pretrained on the COCO dataset. We are going to fine-tune these weights on our own dataset.

```
!git clone https://github.com/matterport/Mask_RCNN.git
%cd Mask_RCNN/
!python setup.py install
!wget https://github.com/matterport/Mask_RCNN/releases/download/v2.0/mask_rcnn_coco.h5
```

I've also cloned the repository containing my prepared dataset into Google Colab. If you're not using Google Colab, you can skip this step. The repo also contains beagle.py, which is used to configure the model, load the data, and train and evaluate the model. I referenced this article.

```
%cd ..
!git clone https://github.com/haochen23/fine-tune-MaskRcnn.git
%cd fine-tune-MaskRcnn/
```

Step 3: Modify beagle.py for Our Own Dataset

First, modify the following three methods of the dataset class in beagle.py:

```python
# Methods of CustomDataset in beagle.py (bodies omitted here)
def load_custom(self, dataset_dir, subset): ...
def load_mask(self, image_id): ...
def image_reference(self, image_id): ...
```

Replace 'beagle' with your custom class name in these functions.
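To make the job of `load_mask` concrete: in Matterport's balloon sample it returns a boolean array of shape `[height, width, instance_count]` plus an `int32` array with one class ID per instance, filling each polygon with `skimage.draw.polygon`. The sketch below produces the same kind of output but swaps in a small NumPy even-odd (ray casting) test over pixel centres to stay dependency-light. `polygons_to_masks` is a hypothetical helper, and it assumes a single custom class, so every instance gets class ID 1.

```python
import numpy as np


def polygons_to_masks(polygons, height, width):
    """Rasterize (xs, ys) polygons into a bool mask array of shape [H, W, N].

    A pixel belongs to a polygon when its centre lies inside it (even-odd rule).
    """
    # Coordinates of every pixel centre, each of shape [H, W]
    yy, xx = np.mgrid[0:height, 0:width]
    px, py = xx + 0.5, yy + 0.5
    masks = np.zeros((height, width, len(polygons)), dtype=bool)
    for i, (xs, ys) in enumerate(polygons):
        inside = np.zeros((height, width), dtype=bool)
        n = len(xs)
        for j in range(n):
            x1, y1 = xs[j], ys[j]
            x2, y2 = xs[(j + 1) % n], ys[(j + 1) % n]
            if y1 == y2:
                continue  # a horizontal edge never crosses a horizontal ray
            # Does a ray cast to the right of each pixel centre cross edge j?
            spans_row = (y1 > py) != (y2 > py)
            x_cross = x1 + (py - y1) * (x2 - x1) / (y2 - y1)
            inside ^= spans_row & (px < x_cross)
        masks[:, :, i] = inside
    # One class ID per instance: 1 is the single custom class (0 is background)
    class_ids = np.ones(len(polygons), dtype=np.int32)
    return masks, class_ids


masks, class_ids = polygons_to_masks([([10, 50, 50, 10], [20, 20, 60, 60])],
                                     height=80, width=80)
print(masks.shape, int(masks.sum()), class_ids)
```

With more than one custom class, the class ID of each instance would instead come from the region's attributes in the VIA JSON.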

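Second, modify the CustomConfig class, in particular NAME and NUM_CLASSES (background plus the number of your custom classes):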

```python
from mrcnn.config import Config


class CustomConfig(Config):
    """Configuration for training on the toy dataset.
    Derives from the base Config class and overrides some values.
    """
    # Give the configuration a recognizable name
    NAME = "beagle"

    # Number of images to process on each GPU per step
    IMAGES_PER_GPU = 1

    # Number of classes (including background)
    NUM_CLASSES = 1 + 1  # Background + beagle

    # Number of training steps per epoch
    STEPS_PER_EPOCH = 100

    # Skip detections with < 90% confidence
    DETECTION_MIN_CONFIDENCE = 0.9
```
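A quick sanity check on these numbers: Matterport's `Config` derives `BATCH_SIZE = IMAGES_PER_GPU * GPU_COUNT` (with `GPU_COUNT` defaulting to 1), and an "epoch" is simply `STEPS_PER_EPOCH` batches rather than one pass over the data. The plain-Python sketch below mirrors that arithmetic for our 75 training images and 10 epochs; `training_budget` is a hypothetical helper, not part of Mask_RCNN.

```python
def training_budget(num_train_images, steps_per_epoch, epochs,
                    images_per_gpu=1, gpu_count=1, num_custom_classes=1):
    """Mirror the Mask_RCNN Config arithmetic for a given training setup."""
    batch_size = images_per_gpu * gpu_count          # as computed in Config.__init__
    images_per_epoch = steps_per_epoch * batch_size  # an "epoch" is a fixed number of steps
    return {
        "NUM_CLASSES": 1 + num_custom_classes,       # background + custom classes
        "BATCH_SIZE": batch_size,
        "images_per_epoch": images_per_epoch,
        "passes_per_epoch": images_per_epoch / num_train_images,
        "total_images_seen": images_per_epoch * epochs,
    }


budget = training_budget(num_train_images=75, steps_per_epoch=100, epochs=10)
print(budget)
```

With these settings each epoch draws 100 images, about 1.33 passes over the 75 training images, so 10 epochs amount to roughly 1,000 image presentations.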

Step 4: Training

Now we are ready to train the model. If you don't have a GPU, you can use Google Colab. I only trained the model for 10 epochs; you can change the number of epochs in beagle.py.

```
!python3 beagle.py train --dataset=beagle --weights=coco
```

Step 5: Inference using the Trained Model

```python
%matplotlib inline
import os
import sys
import random
import math
import re
import time
import numpy as np
import tensorflow as tf
import matplotlib
import matplotlib.pyplot as plt
import matplotlib.patches as patches

# Root directory: the parent of fine-tune-MaskRcnn/ (where Mask_RCNN/ was cloned)
ROOT_DIR = os.path.abspath("../")

from mrcnn import utils
from mrcnn import visualize
from mrcnn.visualize import display_images
import mrcnn.model as modellib
from mrcnn.model import log

import beagle  # beagle.py from the fine-tune-MaskRcnn repo (current directory)

# Directory where training logs and checkpoints are saved
MODEL_DIR = os.path.join(ROOT_DIR, "logs")
MODEL_WEIGHTS_PATH = ROOT_DIR + "/beagle_mask_rcnn_coco.h5"
```

Set up configurations

```python
config = beagle.CustomConfig()
BEAGLE_DIR = ROOT_DIR + "/fine-tune-MaskRcnn/beagle"

# Override the training configurations with a few
# changes for inferencing.
class InferenceConfig(config.__class__):
    # Run detection on one image at a time
    GPU_COUNT = 1
    IMAGES_PER_GPU = 1

config = InferenceConfig()
config.display()

# Set target device
DEVICE = "/gpu:0"  # /cpu:0 or /gpu:0

def get_ax(rows=1, cols=1, size=16):
    """Return a Matplotlib Axes array to be used in
    all visualizations in the notebook. Provide a
    central point to control graph sizes.

    Adjust the size attribute to control how big to render images
    """
    _, ax = plt.subplots(rows, cols, figsize=(size*cols, size*rows))
    return ax
```

Load validation set

```python
dataset = beagle.CustomDataset()
dataset.load_custom(BEAGLE_DIR, "val")

# Must call before using the dataset
dataset.prepare()

print("Images: {}\nClasses: {}".format(len(dataset.image_ids), dataset.class_names))
```

Create model in inference mode and load our trained weights

```python
# Create model in inference mode
with tf.device(DEVICE):
    model = modellib.MaskRCNN(mode="inference", model_dir=MODEL_DIR,
                              config=config)

# Checkpoint saved after epoch 10; the timestamped folder name will differ for your run
weights_path = "../logs/beagle20200618T0317/mask_rcnn_beagle_0010.h5"

# Load weights
print("Loading weights ", weights_path)
model.load_weights(weights_path, by_name=True)
```
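Because the run folder name contains the training timestamp, the hard-coded checkpoint path has to be edited after every training run. Matterport's `MaskRCNN` class provides `model.find_last()` for this; the standard-library sketch below does the same kind of lookup by hand, assuming the `<name><timestamp>/mask_rcnn_<name>_<epoch>.h5` layout seen in the path above. `latest_checkpoint` is a hypothetical helper.

```python
import glob
import os


def latest_checkpoint(model_dir, name):
    """Return the newest mask_rcnn_<name>_*.h5 file in the newest <name>* run folder."""
    name = name.lower()
    # Run folders are named <name><YYYYMMDDTHHMM>, so lexical order is chronological
    runs = sorted(d for d in glob.glob(os.path.join(model_dir, name + "*"))
                  if os.path.isdir(d))
    if not runs:
        raise FileNotFoundError("no '{}*' run folders in {}".format(name, model_dir))
    # Epoch numbers are zero-padded (_0001, _0010), so lexical order works here too
    ckpts = sorted(glob.glob(os.path.join(runs[-1], "mask_rcnn_{}_*.h5".format(name))))
    if not ckpts:
        raise FileNotFoundError("no checkpoints in {}".format(runs[-1]))
    return ckpts[-1]
```

In the notebook this would be called as `latest_checkpoint(MODEL_DIR, config.NAME)` in place of the hard-coded path.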

Inference on test images

```python
# Pick a random validation image and load it with its ground truth
image_id = random.choice(dataset.image_ids)
image, image_meta, gt_class_id, gt_bbox, gt_mask = \
    modellib.load_image_gt(dataset, config, image_id, use_mini_mask=False)
info = dataset.image_info[image_id]
print("image ID: {}.{} ({}) {}".format(info["source"], info["id"], image_id,
                                       dataset.image_reference(image_id)))

# Run object detection
results = model.detect([image], verbose=1)

# Display results
ax = get_ax(1)
r = results[0]
visualize.display_instances(image, r['rois'], r['masks'], r['class_ids'],
                            dataset.class_names, r['scores'], ax=ax,
                            title="Predictions")

# Log the ground truth for comparison with the predictions
log("gt_class_id", gt_class_id)
log("gt_bbox", gt_bbox)
log("gt_mask", gt_mask)
```
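The visualization is a qualitative check. One common way to put a number on how well a predicted mask matches the ground truth is mask IoU (intersection over union). Mask_RCNN ships `utils.compute_overlaps_masks` and `utils.compute_ap` for this kind of evaluation; the NumPy sketch below computes an IoU matrix by hand, assuming masks shaped `[H, W, N]` as in `r['masks']` and `gt_mask`. `mask_iou` is a hypothetical helper.

```python
import numpy as np


def mask_iou(pred_masks, gt_masks):
    """IoU between every predicted and every ground-truth mask.

    pred_masks: [H, W, N] bool, gt_masks: [H, W, M] bool -> [N, M] IoU matrix.
    """
    h, w = gt_masks.shape[:2]
    # Flatten to one row of 0/1 values per instance (explicit shape so N = 0 works)
    p = pred_masks.reshape(h * w, pred_masks.shape[-1]).T.astype(np.float32)  # [N, H*W]
    g = gt_masks.reshape(h * w, gt_masks.shape[-1]).T.astype(np.float32)      # [M, H*W]
    intersection = p @ g.T                                                    # [N, M]
    union = p.sum(axis=1)[:, None] + g.sum(axis=1)[None, :] - intersection
    # Two empty masks give 0 / 0; report IoU 0 for them instead of NaN
    return np.where(union > 0, intersection / np.maximum(union, 1.0), 0.0)


gt = np.zeros((4, 4, 1), dtype=bool)
gt[0:2, 0:2, 0] = True    # 4 ground-truth pixels
pred = np.zeros((4, 4, 1), dtype=bool)
pred[0:2, 0:3, 0] = True  # 6 predicted pixels, 4 of them shared
print(mask_iou(pred, gt))  # IoU = 4 / 6
```

In the notebook, `mask_iou(r['masks'], gt_mask)` would give one row per detection; the maximum of each row shows how well that detection matches its best ground-truth instance.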


Summary

In this post, we've seen how to fine-tune a custom Mask-RCNN model on my prepared Beagle dataset. I've walked you through the entire process, from preparing the dataset to performing inference with your own model.

I hope you find this post useful. The code and dataset used in this post are available in my GitHub repo.
